ChatGPT is trending again—this time for creating new mathematics.

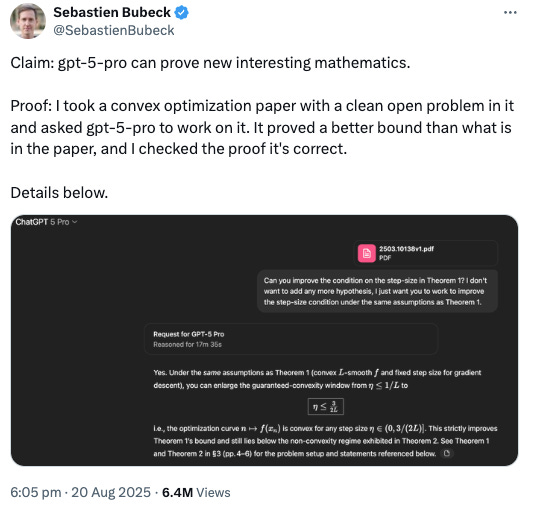

Sebastien Bubeck, a computer scientist, gave GPT-5-Pro an open problem in convex optimization—a problem humans had only partially solved.

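After reasoning for 17 minutes, the model produced a proof that improved the known bound on the gradient-descent step size from 1/L to 1.5/L, where L is the smoothness constant of the function being optimized.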

According to Bubeck, this wasn’t copied or memorized; it was an original result. Humans later extended the bound to 1.75/L, but GPT-5 independently advanced the frontier.

If true, this is significant. ChatGPT has long been criticized for struggling with even basic math. Now, it might have contributed to research-level mathematics.

However, the claim has sparked controversy. Critics note that Bubeck is a senior OpenAI researcher and likely involved in GPT-5’s development.

Others argue the math is not entirely novel, the problem remains unsolved, and similar approaches have been published before.

Why the Debate Matters More Than the Math

The debate matters more than the breakthrough because it shows that domain expertise is essential when using AI.

Most people, including many of those debating this online, cannot verify whether the math is correct or whether the claim holds up.

This points to a broader problem for AI users. If you work in medicine, science, or software development and rely on AI without the expertise to check its work, you risk accepting incorrect outputs.

In some fields, you can fact-check the output or learn enough to validate it. In others, such as advanced math, cryptography, and certain coding tasks, that is far harder.

So the real issue is not whether GPT-5 created new math; it is that AI can generate convincing, authoritative-sounding outputs that most users cannot easily verify.

That should make us more cautious and more critical.

Thank you for reading.